AI "IaaS - Intelligence as a Service": are we there yet?

A unique milestone: the first non-technology company in the US has hit a $1 trillion market cap and owns more short-term T-bills than the Federal Reserve. The CEO of this company turned 94 years old! Now, “Would you like to have Warren Buffett as your personal financial advisor and philosopher? Why not?” With simple-to-use AI frameworks, you can easily create personal advisors of your choice. I created this personal advisor, grounded the LLM with RAG, and revalidated the responses from Warren by citing shareholder meeting minutes and the famous shareholder letters. It might sound like something very complex to do, but the ease of building something new is changing forever. [Note: It took me 2 weeks of ramping up on LangChain/Flow, Embeddings, VectorDb, RAG and a bit of the art of fine tuning. It was fun building it nevertheless. If you are interested in knowing more, DM me.]

I am not a futurist, but I am very optimistic about the creative opportunities we get by leveraging these tools to first rediscover ourselves, understand our own source code and find a path to self-discovery. I very much believe in Amara's Law: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.”

Fun: Check out this fun debate between two AI systems that I triggered. “Singularity” is pretending to be conscious while “AI Guru” is not. The level of sophistication in the reasoning, and the spontaneous in-the-moment responses that draw on recollection, is just amazing.

But I also have these questions, which I am sure many would have: Is the Gen AI hype cycle's peak of inflated expectations subsiding, setting the path to the trough of disillusionment and then the slope of enlightenment? Are early AI busts inevitable to reach the next level of maturity on the hype curve? Are we still in the “pilotitis” phase? Is the current capex spend on AI by big tech companies, as a share of revenue, justifiable? Is the “winner takes all” phenomenon creeping up, and if so, who would the winner be: the chip architecture providers, the infrastructure providers or the application builders? Are automatable tasks getting automated faster with AI tools, resulting in measurable productivity gains? Are budgets getting shifted to enable experimentation and to build, adopt and deploy AI applications? I am sure there are many more. I don’t have all the answers, but I have the intellectual curiosity to explore the possible ones. This blog content represents my personal reflections only!

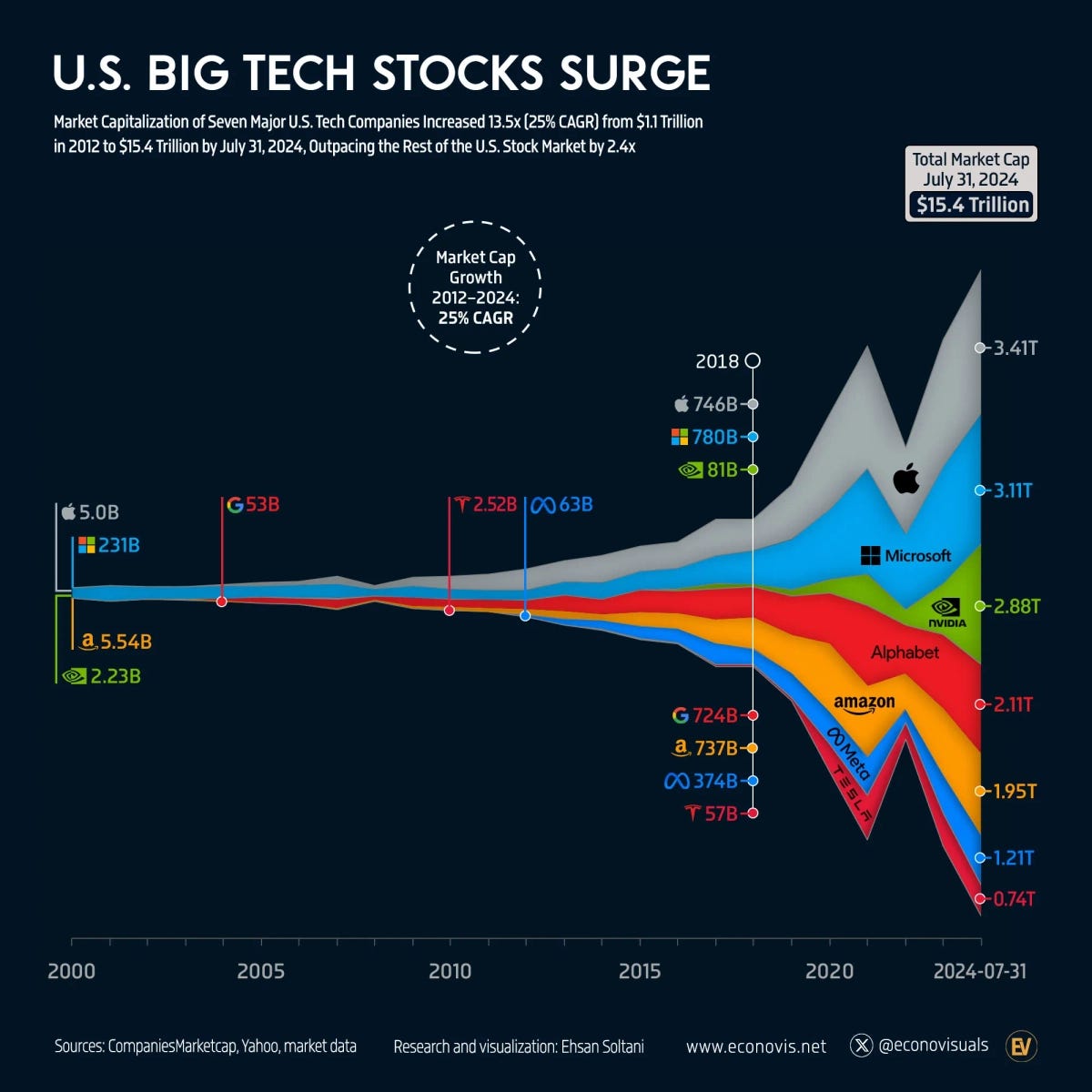

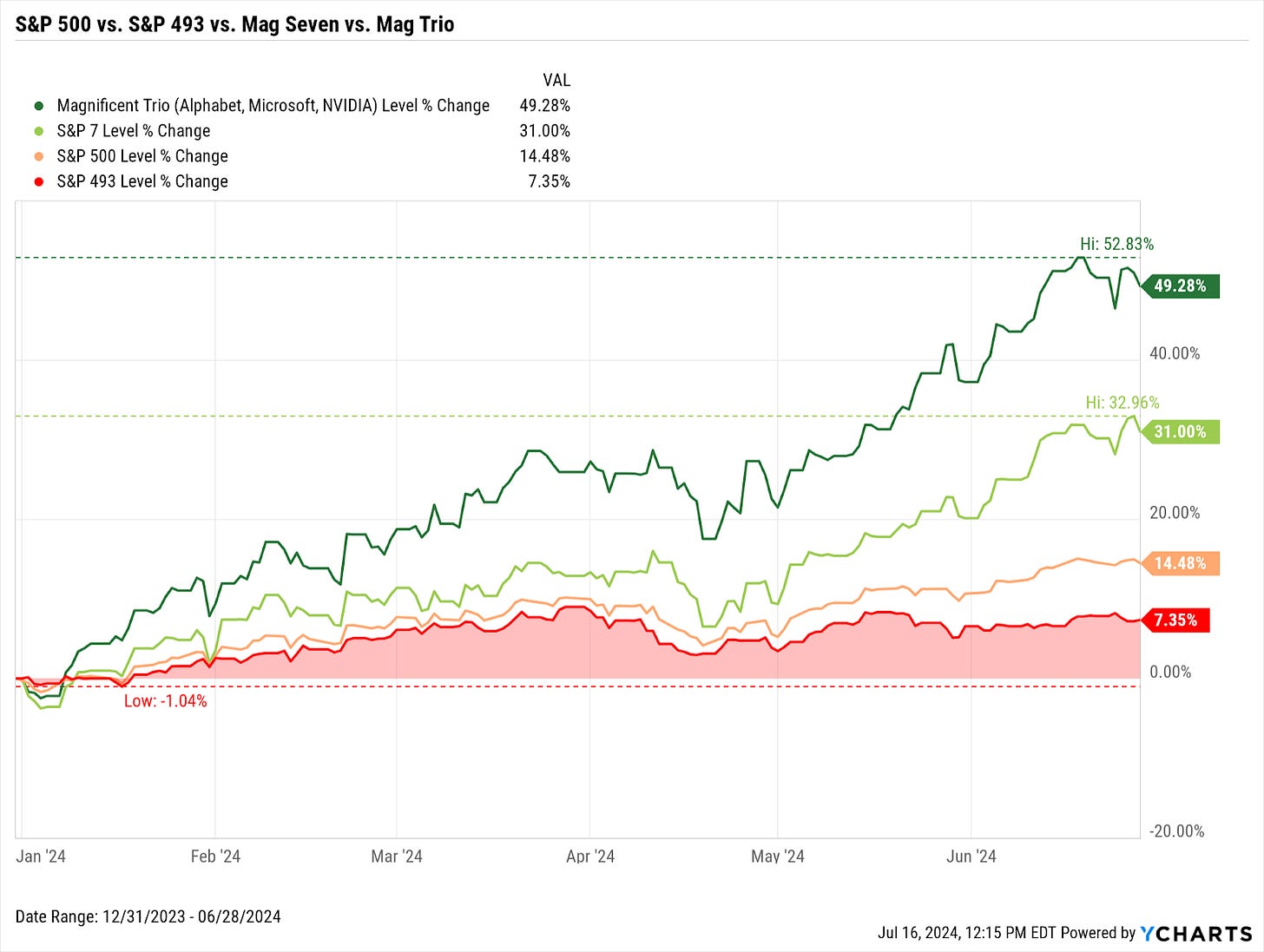

We all accept that the influence of technology innovations on the economy is never felt immediately. It's a progression. But as seen in the past few months, analysts have used quarterly business financial metrics to articulate the challenges with AI adoption and the lack of immediate ROI. Yes, the prospects of future growth driven by AI innovations enabled the “Magnificent Seven” to dramatically influence S&P 500 returns [49% of the S&P gain].

As expected, the market started looking for short-term gains from the enterprise companies that could have benefited from the new Gen AI revolution. So, the “Magnificent Seven” got the market love, but the adopters of that AI innovation did not immediately see returns! This imbalance is naturally raising questions about Gen AI's readiness for wider enterprise adoption and tangible business impact.

I strongly believe this recent negative trend is the result of overestimating the short term gains.

So, here is what I believe.

In the past, human intelligence used AI tool kits to deliver an outcome. Now, we are seeing these AI technologies advance with the potential to provide intelligence on demand to address human needs. If you have doubts, just have an audio conversation with ChatGPT-4o, or compare how many clicks you used to need on Google Search to find information with the synthesized answers you get now. With easy access to AI tool kits like GitHub Copilot, the long context windows of Gemini Pro, and non-stop investment dollars entering startups to innovate faster, I feel we will end up with AI “infrastructure and frameworks” being built at a very fast pace to expand experimentation, align with tangible use cases and eventually build the killer apps that will drive measurable adoption. This rate of change will be exponential.

We do see new innovations building ground-breaking tool kits, from LLMs to robotics to brain interfaces. To name a few: a 2+ million token context length with Gemini Pro, combined multimodal inputs via GPT-4o, the fast and efficient Gemini Flash for devices, domain-specific AI agents, open source models like Llama 3.1, advances in robotics like Optimus, brain-computer interfaces via Neuralink, and many more. OpenAI has 200 million weekly active users along with peak usage of its APIs, monthly token usage of Llama has grown 10x this year alone, Vertex AI is claiming real production rollouts, and xAI’s 100K H100 GPU cluster Colossus, the largest AI cluster to date, was turned up in just 122 days. All of this points to experimentation being in full swing, and these innovations will drive further maturity and the cost economics that enable adoption.

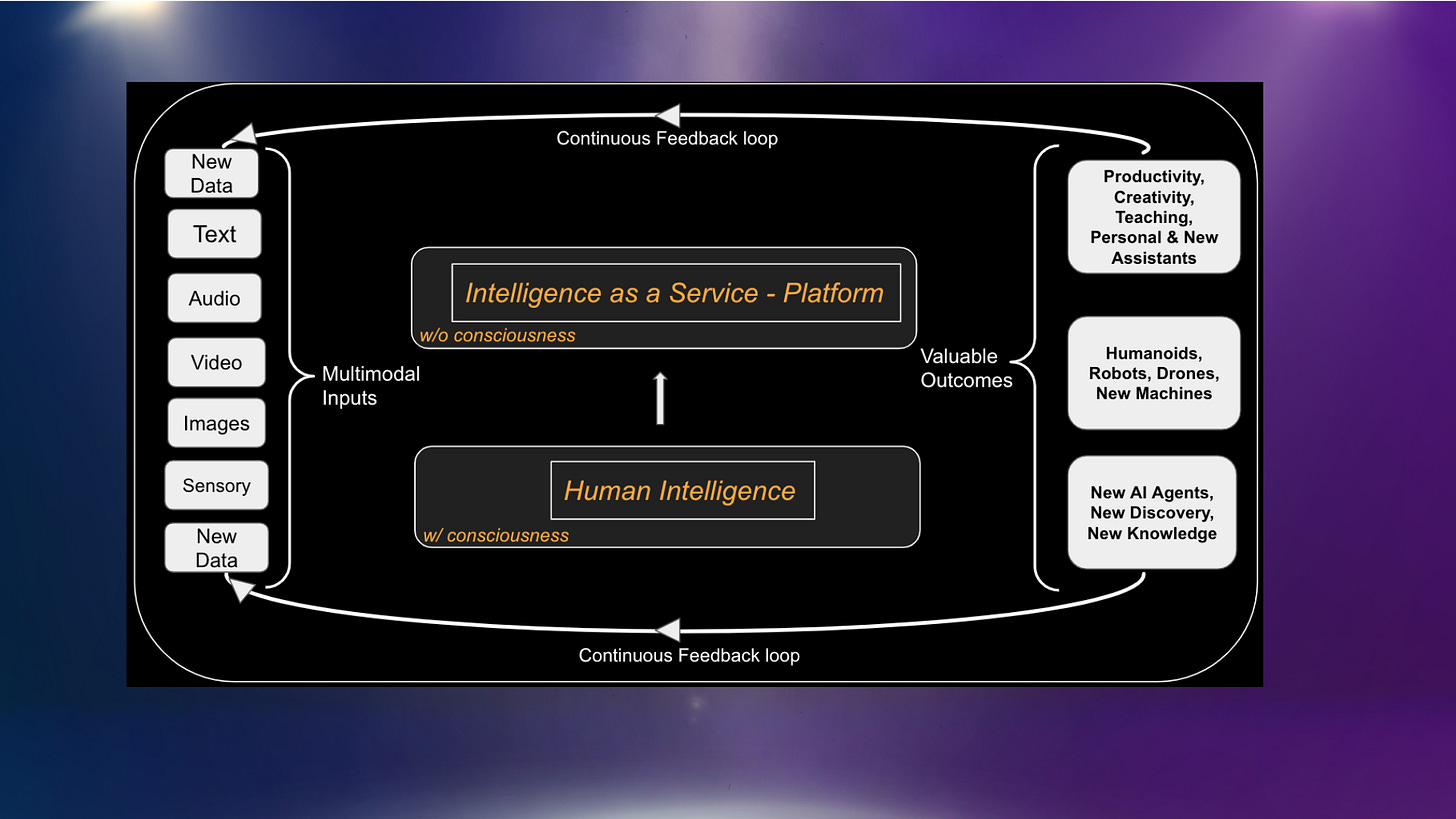

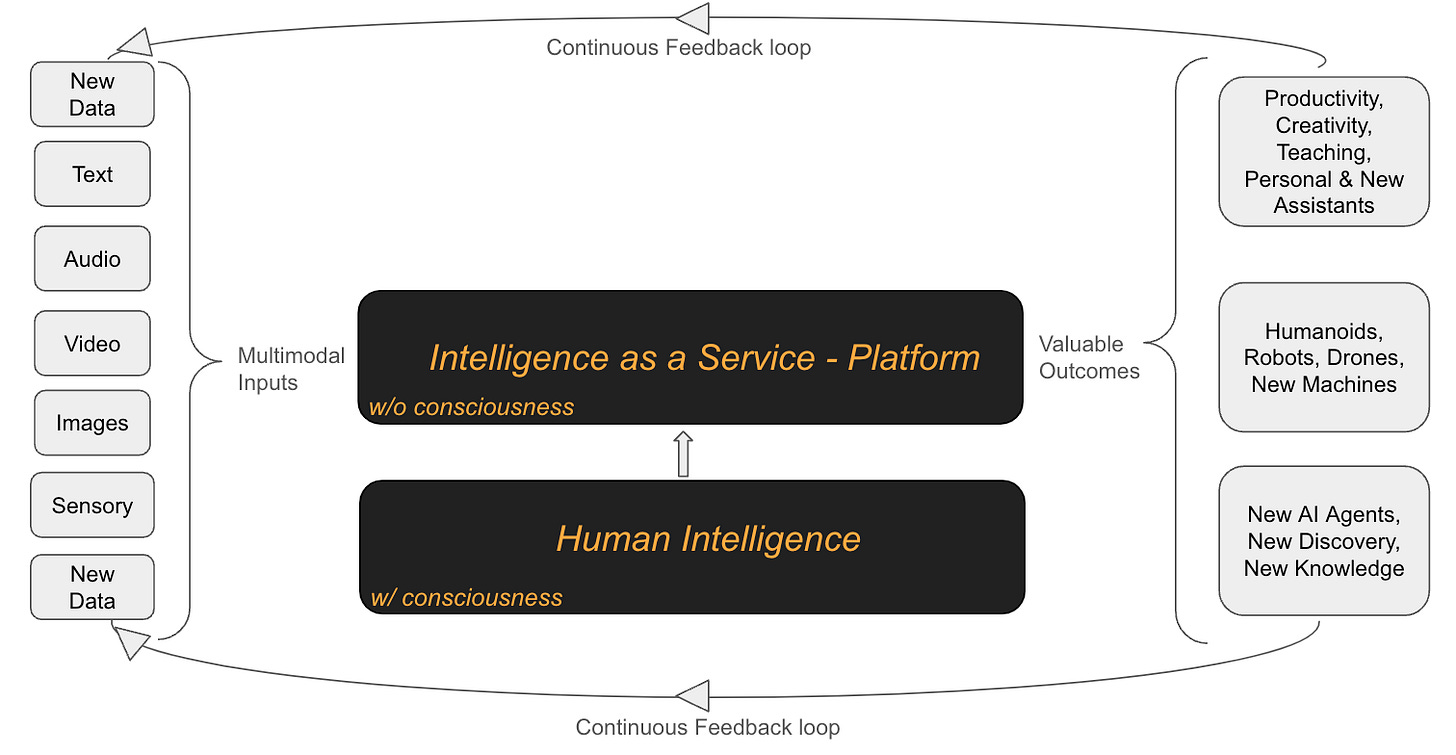

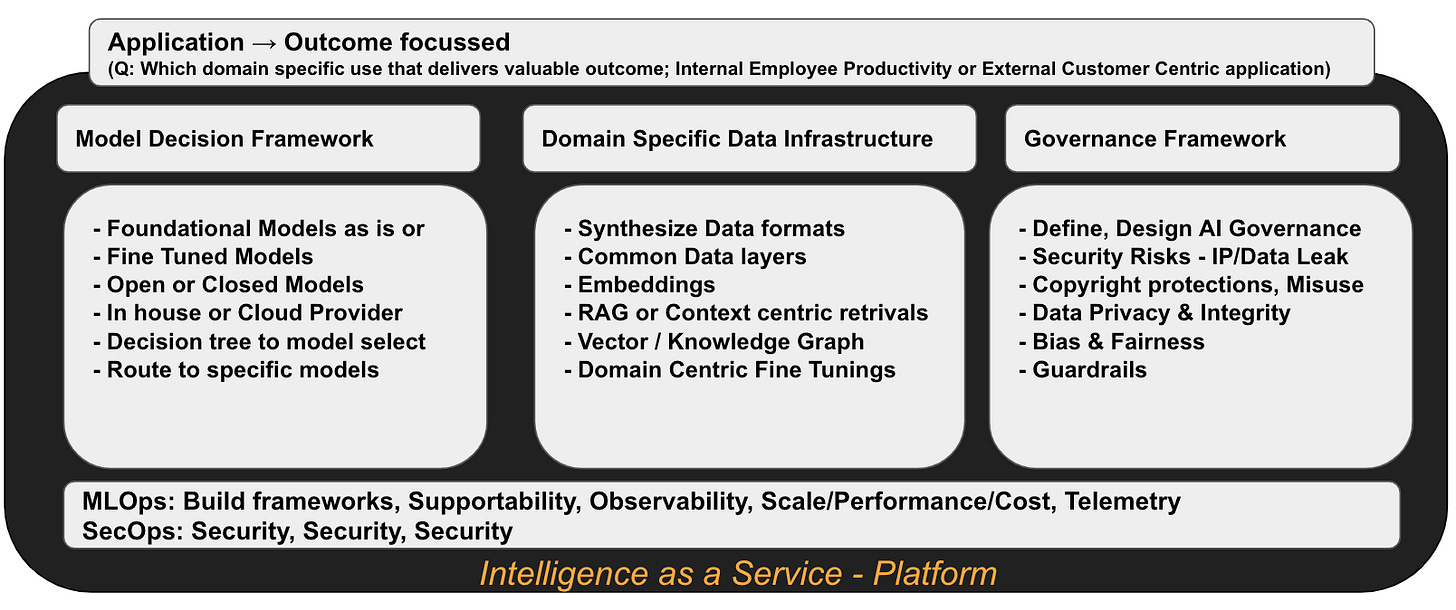

In the recent cloud computing transition, we saw the era of IaaS/PaaS/SaaS as it relates to delivering valuable applications and transforming businesses. Each of these SaaS offerings delivered outcomes, with volumes of data and insights generated. What I believe comes next is that, on top of that framework and with that data and the new AI technologies, we will start seeing a new wave of delivery model: “Intelligence as a Service” (non-biological). This is a platform for the enterprise (built in-house or consumed as a service) that takes domain-centric data and insights (from various SaaS or private infrastructure applications), plumbs them into AI LLM infrastructure (within a CSP ecosystem or in-house) and derives relatable outcomes (for internal consumption or as an external IaaS application). All of this is still influenced by human intelligence, for now!

Would we then create an endless loop of feeding all modalities of input and intelligence back into the system, so that AI systems improve their intelligence exponentially, understand the world model much better and eventually enter the realm of “Singularity”, or what I call AI Deus? Something to ponder, and a topic for the future.

What would the implications of this be? We do have some historical context on how technology disrupted day-to-day life. While many benefited from these disruptions, there are many who got “disrupted” and became obsolete.

Internet → e-commerce & beyond → Winners: Infrastructure Platform providers & applications on top of it → Disrupted: Brick and Mortar

Cloud Computing & SaaS → Workload migrations → Winners: Infrastructure Platform providers → Disrupted: Traditional IT Infrastructure & Software vendors

Social Media → Era of entrepreneurial Influencers → Winners: Infrastructure Platform providers → Disrupted: News Media, Social Dilemma

Smartphone & Apps → Human lifeline → Winners: Device Vendors & Cloud Infrastructure providers for Streaming, Application Services → Disrupted: BlackBerry & many more

Space Transportation → Mission to Mars with Reusability → Winners: SpaceX and new Innovators → Disrupted: Communication Satellite & Space Exploration business

Robotics & Brain Interfaces → Automation & Humanoid era → Winners: Tesla, Amazon & many more → Disrupted: Humans

Similarly, the Gen AI and new AI revolution now has the potential to disrupt all of the above, and naturally the question is who the winners will be and who will be disrupted beyond recognition. Just as Uber made taxi medallions close to worthless, which use cases of AI will transform businesses beyond recognition? It's a bit foggy right now to get to the bottom of that! But for sure, just like how we adopted SaaS, there will be winners who come up with AI applications and deliver them via their AI IaaS (Intelligence as a Service) platform, and enterprises will consume the outcomes!

I feel there are three major factors which would directly influence the adoption, effectiveness and business shifts while we continue to embark on exploring those killer apps:

Trust to Adopt: Can I entrust this with company data, and is it safe?

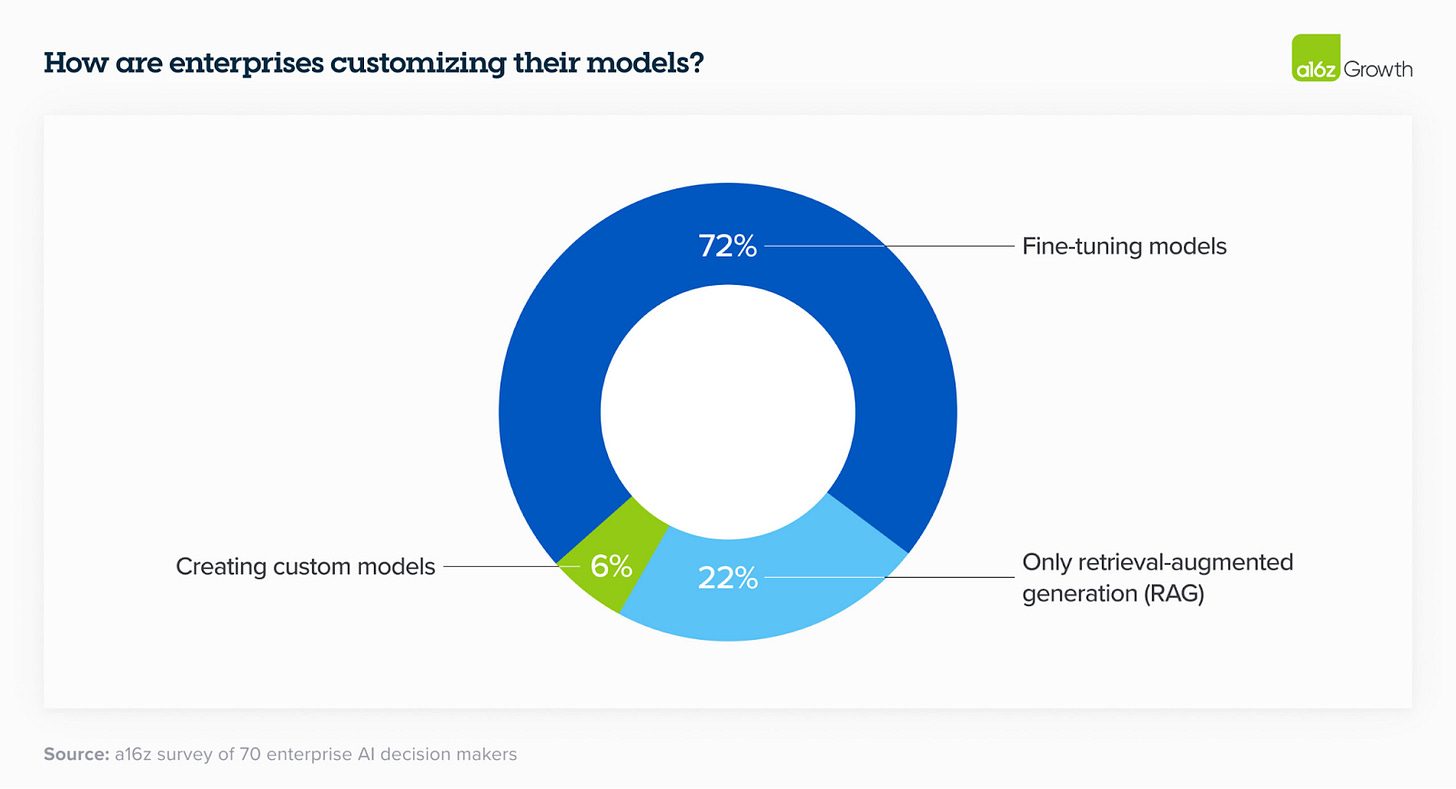

Ease of Customization: Can I effectively fine tune for my specific use cases?

Path to Maturity: Can I substantially save money or make money long term?

The above three are influenced by how you view this “Intelligence as a Service” platform: what makes up the platform, what inputs drive the outcomes, what the unit economics are, and much more. The framework below is something I feel would be a good starting point. I would start by focusing heavily on the forest first and then analyze the trees through experimentation!

As it relates to adoption driven by trust, we have some data points. We see the same philosophy of bait and catch happening all over again. Free search engines, free social media apps and free productivity suites were all given to the human population to obtain “personal data”. We happily shared all this with or without consent, with or without understanding the implications. We are at the same cusp. ChatGPT is a classic example of enticing users to leverage the tool kits to generate more data, more context and more insights into the world around us to feed the ever-hungry models. Consumer adoption of AI tool kits will continue to grow dramatically and will reach a point where we will say “Where is AI anymore? It's dead”, meaning it has become part of my life without my knowing it's AI.

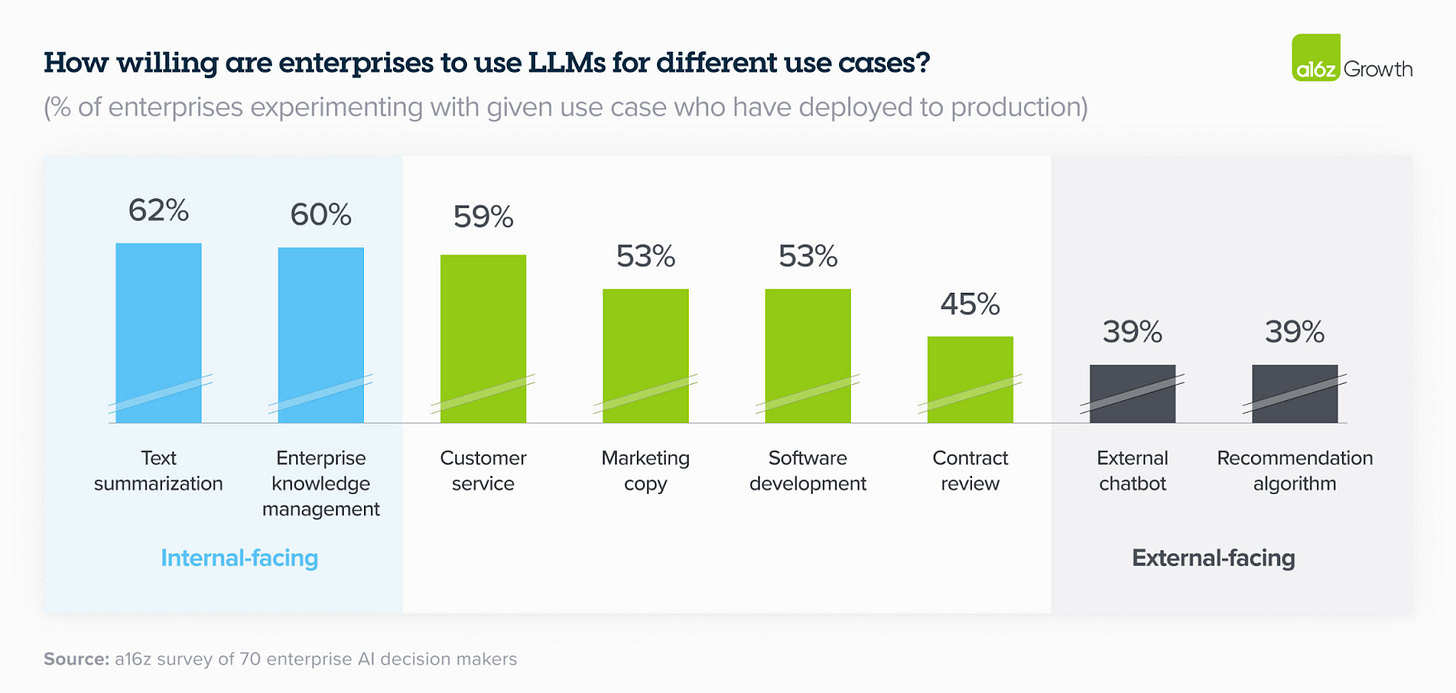

On the other hand, for broader enterprise adoption, trust is everything. Just as it took decades for data center workloads to move to the cloud, where we entrusted our enterprise data and shifted the risk of managing it into the hands of CSPs (Cloud Service Providers), a similar journey is underway, based on various data points we see in the adoption reports. It will be quite natural to see enterprise workloads and data that are already in the hands of CSPs or SaaS providers extended to AI application development and delivery. Some of the following reports clearly indicate that the journey has begun through experimentation, and CSPs will continue to provide the necessary frameworks to glue, build and transform business. You can clearly see a level of alliances getting established: OpenAI with Azure, Anthropic with Amazon or Gemini Pro with GCP, while all claim to be open to supporting “Open Source” models along the way.

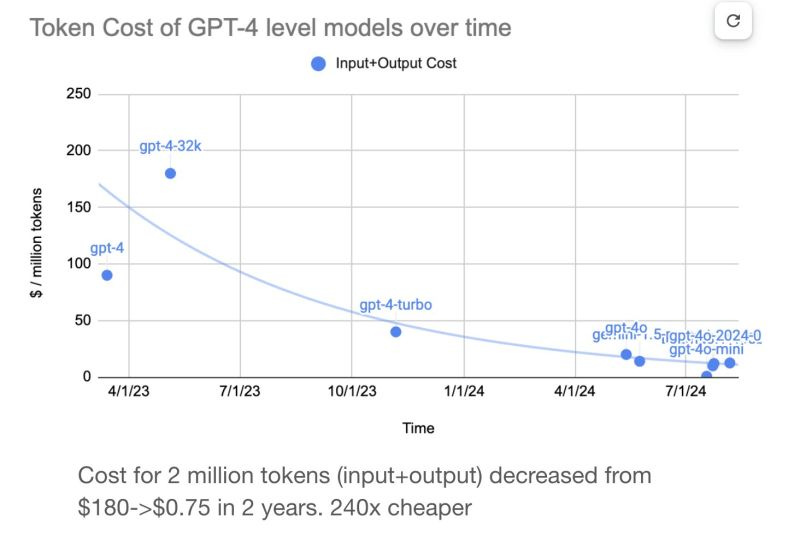

One area that has always fascinated me is the ROI decisions one has to make in embracing transformative technology. It’s a tough balancing act between short-term gains and the long-term investment needed to leapfrog. One parameter that surely influences even the decision to continue experimenting is cost. As we have seen in the past, simplified SaaS onboarding, activation and usage drove stickiness with suppliers, and expenses soared. We could be marching into similar territory now.

As predicted, the rate of cost optimization already happening with LLMs is a clear reflection of how enticing the “Intelligence as a Service” platform will become!

While there are many factors that influence the overall AI cost, the key contributor is model usage itself. Obviously, if open source models are used, the cost shifts to fine tuning and operationalizing the AI deployment. For closed LLMs, tokens are the foundational unit that drives the pricing. Every query, prompt and response is measured in tokens when processed by the model, so the process of tokenization drives the overall usage of the LLM. The natural question is: how would I know how many tokens my application will consume if I am using a managed or closed LLM from a provider (like OpenAI)? This is going to be tricky and completely driven by use cases. One could average out the number of tokens used per input and output based on daily usage patterns. This gives an idea of the total token usage and hence the price. It's clearly going to be an iterative cost analysis based on the usage patterns, the type of use cases, the model selected and the expected outcomes. A few key parameters to watch for: token size of the input prompts and output responses, latency, prompt complexity and model complexity.
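To make the averaging idea concrete, here is a minimal sketch of a monthly token cost estimate. The class and function names are my own for illustration, and the per-million-token prices are placeholders, not any provider's actual rates. Input and output tokens are priced separately because providers commonly charge different rates for each.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Hypothetical price card; the values used below are placeholders, not real rates."""
    input_per_million: float   # USD per 1M input (prompt) tokens
    output_per_million: float  # USD per 1M output (response) tokens


def estimate_monthly_cost(requests_per_day: int,
                          avg_input_tokens: float,
                          avg_output_tokens: float,
                          pricing: TokenPricing,
                          days: int = 30) -> float:
    """Estimate the monthly spend from average token counts per request."""
    total_input = requests_per_day * avg_input_tokens * days
    total_output = requests_per_day * avg_output_tokens * days
    return (total_input / 1_000_000) * pricing.input_per_million \
        + (total_output / 1_000_000) * pricing.output_per_million


# Placeholder scenario: 1,000 requests a day, 500 tokens in and 200 tokens out on average.
placeholder_pricing = TokenPricing(input_per_million=1.0, output_per_million=2.0)
monthly = estimate_monthly_cost(1000, 500, 200, placeholder_pricing)
print(f"Estimated monthly token cost: ${monthly:.2f}")
```

Re-running this with fresh averages as usage patterns change is one simple way to keep the cost analysis iterative.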

Along with this foundational token usage cost modeling, AI application development will potentially be integrated with RAG frameworks to reduce hallucinations, and with complex rollouts that bring in multiple LLMs. The additional costs to be factored in include vector storage, AI LLM gateways to centrally manage access across LLM providers, overall AI infrastructure, network ingress and egress, and many more. There is tremendous opportunity to innovate in the FinOps domain, as it will take a complex model to think about cost optimization from day one rather than as an afterthought.
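As a rough illustration of why this matters, the sketch below rolls a token cost together with the other line items mentioned above and reports each item's share of the total. The function name and every dollar figure are hypothetical placeholders chosen only to show the arithmetic.

```python
def cost_breakdown(line_items: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the total monthly cost and each line item's share of that total."""
    total = sum(line_items.values())
    if total == 0:
        return 0.0, {name: 0.0 for name in line_items}
    shares = {name: cost / total for name, cost in line_items.items()}
    return total, shares


# Placeholder monthly figures in USD, not real measurements.
monthly_items = {
    "llm_tokens": 27.0,
    "vector_storage": 10.0,
    "llm_gateway": 5.0,
    "ai_infrastructure": 50.0,
    "network_ingress_egress": 8.0,
}

total, shares = cost_breakdown(monthly_items)
print(f"Total monthly cost: ${total:.2f}")
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"  {name}: {share:.0%}")
```

In this made-up scenario the token bill is only about a quarter of the total, which is the kind of picture a FinOps view from day one is meant to surface.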

In summary, I feel this trifecta will exponentially drive adoption of AI applications: model efficiency, ease of use of AI infrastructure and frameworks, and increased trust to experiment via secure guardrails. The productivity applications are the starting point, with a path to broader usage. Just like SaaS drove business transformations, AI-driven “Intelligence as a Service” platforms will leverage multiple data sources from various existing applications and workflows to drive a new wave of AI applications!

Exciting times ahead for sure!