The Digital Gurukul: AI Guru with AI Shishya Parampara?

"I think a lot of the fears around AI are predicated on the idea that somehow there is a hard takeoff, which is that the minute you turn on an AI system that is capable of human intelligence or super intelligence, it’s going to take over the world within minutes. This is preposterous," exclaimed Yann LeCun. Yoshua Bengio countered, "What Max and I and others are saying is not, necessarily, there’s going to be a catastrophe but that we need to understand what can go wrong so that we can prepare for it." The ever-pragmatic Melanie Mitchell added, "The whole history of AI has been a history of failed predictions. Back in the 1950s and 60s, people were predicting the same thing about super-intelligent AI and talking about existential risk, but it was wrong then. I’d say it’s wrong now." Max Tegmark, with a hint of caution in his voice, asked, "What do you actually think the probability is that we are going to get superhuman intelligence, say, in 20 years, say, in 100 years? What is your plan for how to make it safe?"

These four bright minds met at the Munk Debate to settle the resolution: "Be it resolved, AI research and development poses an existential threat." The opposition won by a 4% margin, having changed more audience minds relative to the pre-debate poll. Lex Fridman and Marc Andreessen had a similar dialogue, and one statement from Marc stuck with me: “I think we're going to need to start thinking about using military-grade security to protect AI data centers. Because if you have a really powerful AI model, and you train it on a massive dataset, and then you lose that data center, that's like losing a nuclear weapon. It's that dangerous.”

Now, where do you stand on this AI risk debate? Do we need government regulation now, or should we adopt a wait-and-see approach?

Every four weeks, I delve into a new facet of AI, sharing my findings and musings. I enjoy reading, experimenting and sharing my learnings at every opportunity. These are all my own thoughts, independent of what I do professionally. Let’s look at how the last four weeks have gone.

This time, my Indian roots led me to draw parallels with the Guru-Shishya Parampara (Gurukul framework) from our rich mythology. This framework is a sacred teacher-student relationship that forms the cornerstone of knowledge acquisition and moral upbringing. Its enlightened Gurus were powerful enough to drive a nation to glory or to ruin, yet despite their wisdom they have been known to err, with far-reaching consequences, as seen in the epic tale of Guru Dronacharya in the Mahabharata.

Are we in the process of creating a Digital Gurukul? As we keep aligning these AI models to become clones of human intelligence and beyond, training them on every dataset we have, including our myths and realities, our cultures and traditions, our successes and failures, and pushing their IQs beyond Einstein's, will we end up creating AI Gurus? I've been contemplating the next wave of innovation: AI Agents, instructed by these centralized AI Gurus, undertaking specific tasks. This Digital Gurukul operates on a global scale, transcending geographical and cultural boundaries. The fear expressed by some scientists is of an uncontrolled AI Guru whose intelligence and goals may not align with ours, potentially driving AI Agents toward destructive tasks. Do you see it the same way?

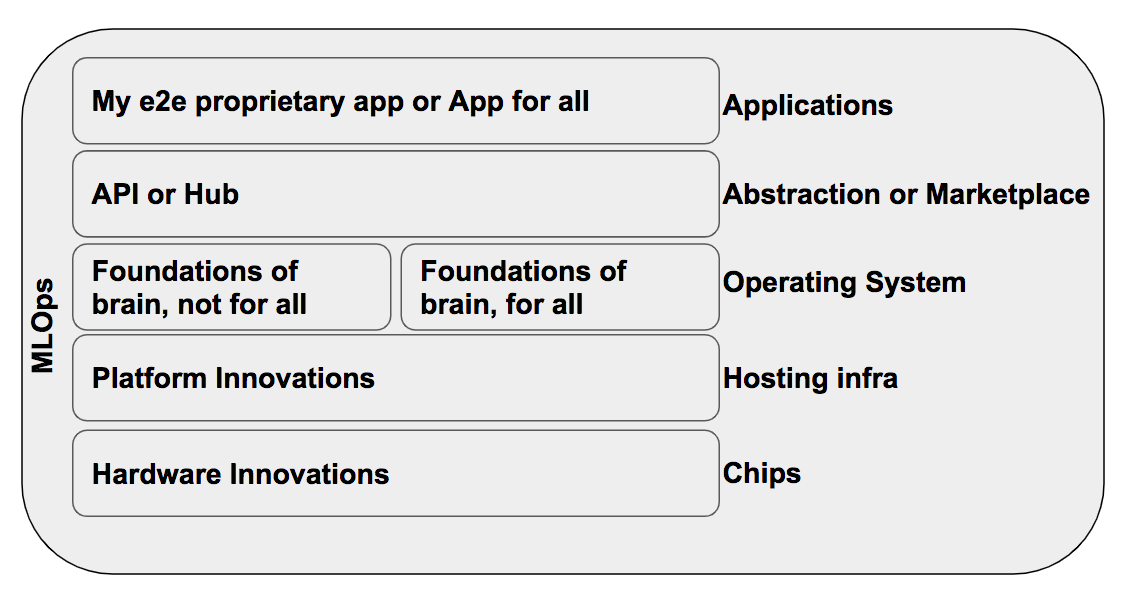

With that, let's look at what has been happening in the last four weeks and what it signals about how far we are pushing the envelope. To gain knowledge and experience in these fields, I have embraced experimentation and learning. To stay abreast of the continuous influx of disruptive innovations in both the commercial and research realms, I have adopted a simple mental model.

Using this mental model, I look for ways to accomplish the outcomes below and track which innovations are happening in which layers of the stack. This keeps my exploration grounded in business outcomes.

Outcome 1: I would like to build a proprietary application that is accessible to our end customers. The purpose of this application is to facilitate conversations between users and our systems, enabling them to perform tasks that enhance productivity and operational simplicity. To achieve this, I aim to create a custom-trained foundational model, which I refer to as the "brain," trained on my private datasets and tailored specifically to this application. To carry out these operations effectively, I plan to leverage an end-to-end (e2e) platform that offers the flexibility to use both open-source and closed-source foundational models, along with a comprehensive operational toolkit (ops). Securing our private datasets and trained outcomes is of utmost importance, so we must establish robust security infrastructure.

Outcome 2: I just want to use an e2e application that takes my natural language and translates it into specific outcomes: turning my plain English text into Sanskrit, or turning my text into a video or audio clip. I care less about how it all happens as long as it boosts my creativity or my productivity.

Outcome 3: I am unsure which foundational model to use for my applications. I want to experiment with various open-source models from a marketplace or hub. Most importantly, I want a collaborative environment across my company with private datasets, shared common models, no reinventing the wheel, and common operational tools shared across teams.

Outcome 4: I want to use as-a-service frameworks to streamline my ML development and MLOps tasks, ensuring continuous delivery of high-performing production models. This is critical when I aim to deliver an e2e proprietary application to my end customers, incorporated into my own existing service or product.

Here is a quick summary of innovations in each layer of the mental model.

Hardware Innovations

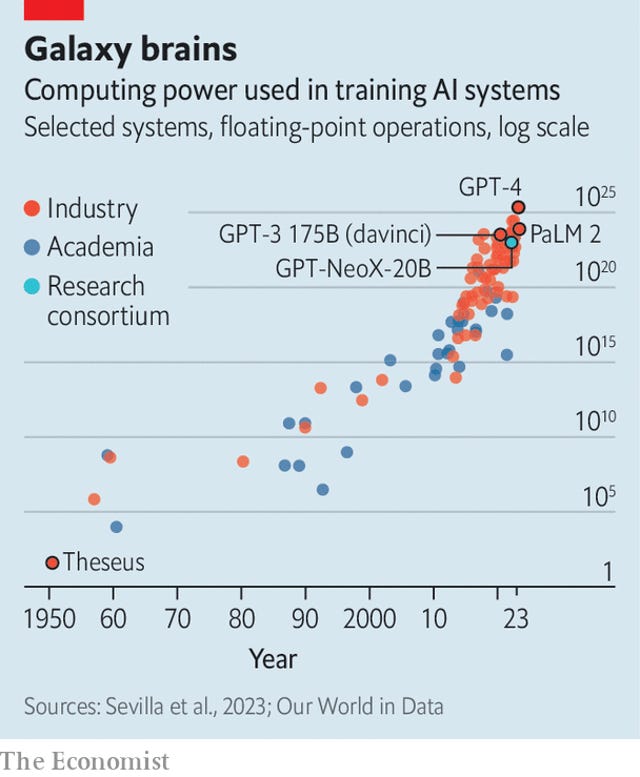

Analyses point to the computing power needed for AI training doubling every 6 to 10 months. At this rate, it will become too hard to keep training large language models with ever more parameters in pursuit of an “AI Guru”. As Sam Altman put it in April, reflecting on the history of giant AI models: “I think we’re at the end of an era.”
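To make the doubling claim concrete, the compounding can be worked out directly. This is a minimal sketch that assumes a steady doubling period (real growth is noisier); the function name is illustrative.

```python
def compute_growth(months: float, doubling_months: float) -> float:
    """Return the factor by which compute demand grows over `months`,
    assuming it doubles every `doubling_months` months."""
    return 2 ** (months / doubling_months)


# Five-year horizon at both ends of the 6-to-10-month range.
for doubling in (6, 10):
    factor = compute_growth(60, doubling)
    print(f"doubling every {doubling} months -> {factor:,.0f}x in 5 years")
```

Even at the slower end, five years means a 64x increase, and at the faster end it is 1,024x, which is why simply scaling parameters looks hard to sustain.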

The race for more powerful yet cost-effective GPUs, TPUs and other AI chips is heating up. AMD's MI300 is the closest alternative to Nvidia's GPUs amid the current frenzy of demand. We will need to watch how all of this plays out over the next few quarters.

Academic researchers created Guanaco, a new model fine-tuned from LLaMA on a single GPU in about a day, without sacrificing much, if any, performance.
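Part of what makes single-GPU fine-tuning possible is quantization: Guanaco came out of the QLoRA work, which trains small adapter weights on top of a base model frozen in 4-bit precision. The back-of-the-envelope sketch below estimates weight memory only, assuming the 65B-parameter LLaMA base; it ignores activations, adapter optimizer state and other overhead, so treat the numbers as a lower bound.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed to hold model weights."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 65e9  # assumed: the 65B-parameter LLaMA base model

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

At 16-bit precision the weights alone (about 130 GB) exceed any single GPU, while at 4-bit (about 32.5 GB) they fit on a single 48 GB card, which is the setup the QLoRA authors report.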

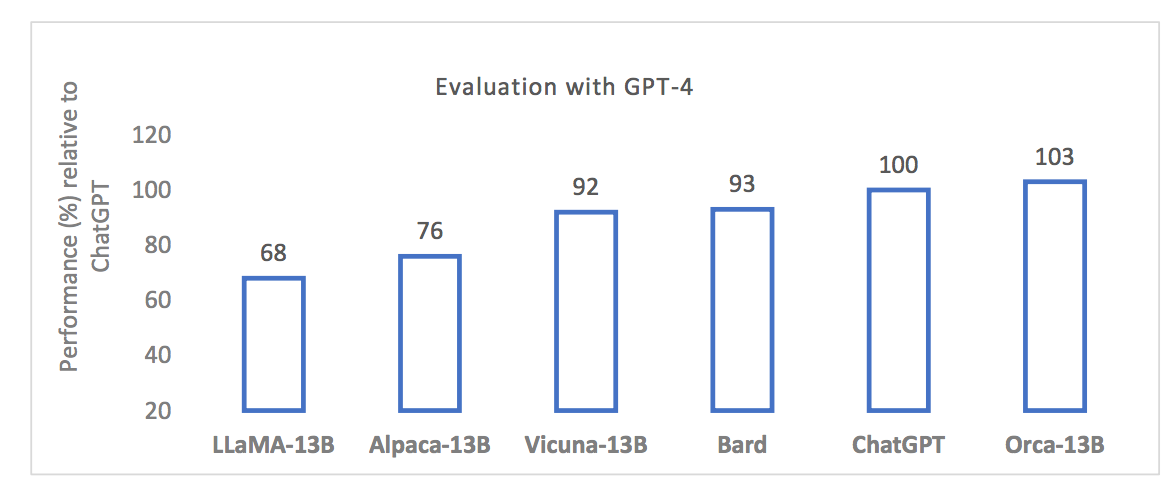

One creative approach is to have a single uber LFM (large foundation model) with a set of smaller LLMs that learn from it. This is why I was fascinated to hear about the “Teacher & Student (Guru & Shishya)” concept behind Orca, a new LLM from Microsoft Research. Orca is a 13-billion-parameter model that learns to imitate the reasoning process of LFMs, and it reaches parity with ChatGPT on the Big-Bench Hard (BBH) reasoning benchmark. The research indicates that learning from step-by-step explanations, whether generated by humans or by more advanced AI models, is a promising direction for improving model capabilities and skills.
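The Guru & Shishya idea has a classic form in machine learning, knowledge distillation, where a small student is trained to match a large teacher's softened output probabilities. Note that Orca itself learns from the teacher's generated step-by-step explanations (ordinary fine-tuning on text), not from its probabilities. The sketch below shows the classic soft-target loss instead, in plain Python with made-up logits, purely to illustrate the teacher-student mechanics.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature gives softer ones."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by T^2 as in classic knowledge distillation."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # hypothetical teacher scores over three answers
print(distillation_loss(teacher, [4.0, 1.0, 0.5]))  # student agrees: zero loss
print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # student disagrees: positive loss
```

Training nudges the student's scores so this loss shrinks (usually alongside the ordinary loss on true labels); the temperature exposes the teacher's relative preferences among the wrong answers, which is the extra signal a Shishya gains from a Guru over bare labels.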

Platform Innovations

Incumbent cloud providers keep adding new capabilities to their ecosystem platforms so that anyone can bring their own base model to train or retrain, or use an existing pre-trained model. Check it out here for the latest trends in GPU computing infrastructure providers.

Databricks' acquisition of MosaicML is another example of consolidation in the space, aimed at better platforms for training, inference and all the efficiencies needed to build custom models.

CoreWeave, an Nvidia-backed startup, raised $200M to further expand its GPU cloud infrastructure of 8,000 servers, which it claims is 80% cheaper.

Startups like Lambda Labs and Jarvis Labs are venturing into building GPU farms to meet the demand for AI model training.

Foundational Model Innovations (the brain behind everything)

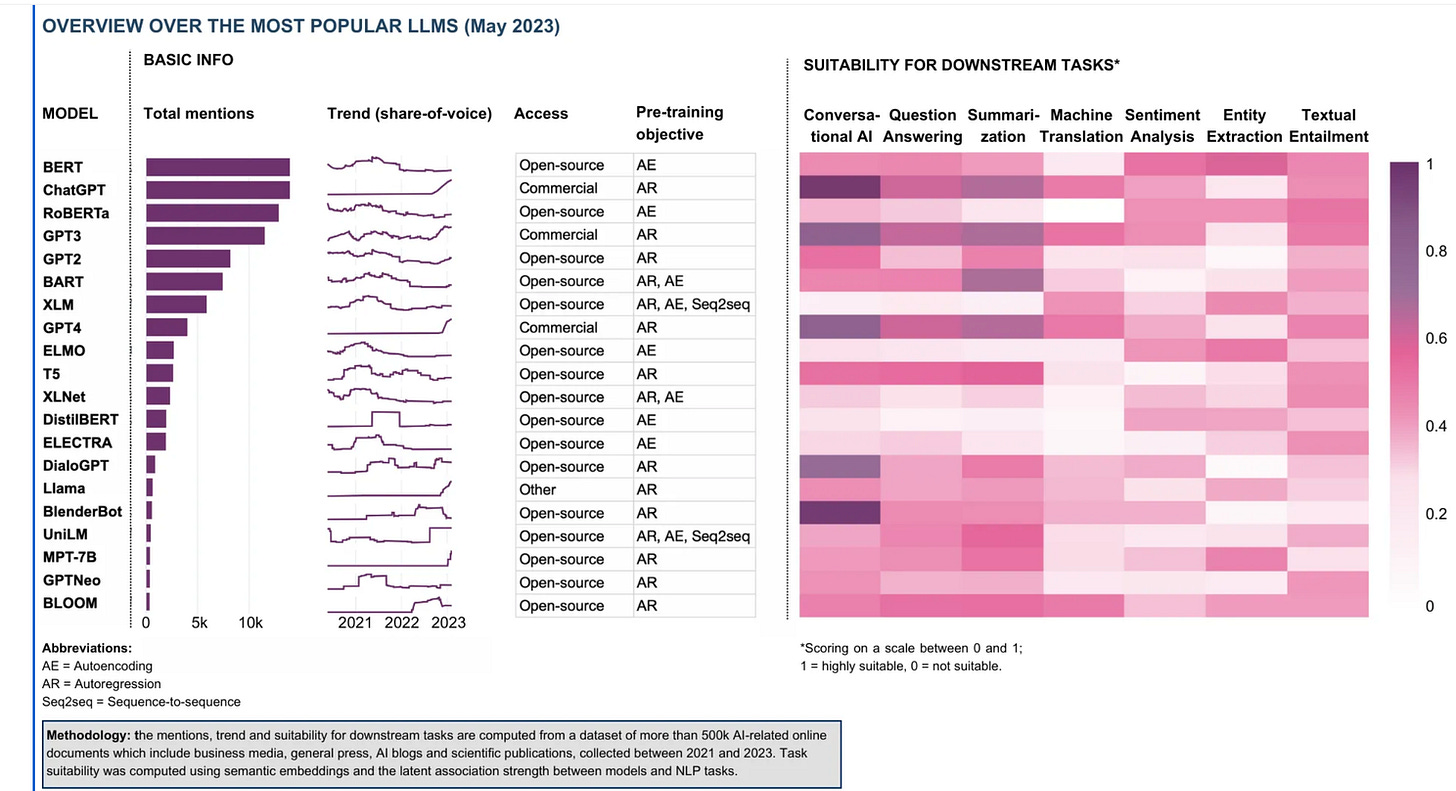

Each flavor of LLM is designed to deliver a unique value proposition. We cannot simply take an autoregressive LLM such as GPT-4 as is and use it for deeper analytical tasks over private datasets. The open-source versus closed-source debate continues: the open-source community, constrained by resources, drives creative methods to build cost-effective, simpler models, while commercial closed-source models advance at a tremendous speed of innovation. Do they need to move in lockstep? Not really.
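“Autoregressive” means the model generates one token at a time, each conditioned on what it has produced so far; it knows nothing beyond its training data and whatever is placed in its context, which is one reason private data needs extra plumbing (fine-tuning, or retrieving it into the prompt). The toy sketch below shows greedy autoregressive decoding, assuming a hand-written bigram table as a stand-in for the neural network; a real LLM conditions on the entire context, not just the last token.

```python
# Toy "model": probability of the next token given only the previous token.
# Hypothetical values chosen for illustration.
BIGRAMS = {
    "the": {"guru": 0.6, "shishya": 0.4},
    "guru": {"teaches": 0.9, "<eos>": 0.1},
    "shishya": {"learns": 0.8, "<eos>": 0.2},
    "teaches": {"the": 0.7, "<eos>": 0.3},
    "learns": {"<eos>": 1.0},
}


def generate(model, prompt, max_new_tokens=5, eos="<eos>"):
    """Greedy decoding: repeatedly append the most likely next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        candidates = model.get(tokens[-1])
        if not candidates:  # no known continuation
            break
        next_token = max(candidates, key=candidates.get)
        tokens.append(next_token)
        if next_token == eos:  # model chose to stop
            break
    return tokens


print(generate(BIGRAMS, ["the"]))
```

Starting from "the", the loop keeps cycling through "guru teaches the" until it hits the token limit, a small hint of why real systems usually sample rather than always taking the single most likely token.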

One of the best hubs I use to follow what is happening across models and modalities is the Hugging Face Hub. Check it out here.

Salesforce announced XGen-7B, a new 7B-parameter LLM with an 8k context window, trained on 1.5T tokens and released under the Apache 2.0 license. XGen uses the same architecture as Meta's LLaMA and is therefore a drop-in replacement suitable for commercial use!

Inflection AI caught my attention while I was exploring the possibility of a personalized AI to interact with. The company has raised $1.3 billion in a fresh funding round led by Microsoft, Reid Hoffman, Bill Gates, Eric Schmidt and new investor NVIDIA, bringing its total funding to $1.525 billion. This aligns with the notion of building AI Gurus with all our knowledge so they can help us perform our tasks better.

Application Development Innovations

This space is exploding, giving a creativity boost to the right side of the brain. I break it down into a few categories:

Midjourney v5.2's Zoom Out feature took the world by storm, including the 3D extensions built by creators like Ilumine AI.

The ChatGPT plugin store is exploding with valuable productivity toolkits. One of my current favorites is the Visla plugin on ChatGPT Plus, which stitches together a decent-quality video from a text prompt.

Google's MusicLM and Meta's Voicebox are other recent additions, and it is exciting to see them reach deeper into the space of human creativity.

One of my favorite creators, @XRarchitect, recently posted an AI + AR + QR code experience: an unbelievable user experience with the potential to disrupt traditional marketing. Try it yourself by scanning the QR code shown to the left of this content.

Each of these lets an individual application perform a specific task or objective. As I have been highlighting, the natural next trend is interconnecting LLMs, other models, and applications outside the LLM domain to deliver a complete solution outcome. A new set of innovations in this space enables creators and developers to build new capabilities. A few trends that have caught my attention:

Function calling API from OpenAI: a new way to connect GPT's capabilities more reliably with external tools and APIs. Function calling lets developers get structured data back from the model more reliably. I have started exploring it.
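In the June 2023 release of the Chat Completions API, a request can include a `functions` list whose `parameters` are described in JSON Schema; when the model decides a function is needed, the assistant message contains a `function_call` object with a `name` and a JSON-encoded `arguments` string. The sketch below covers only the local half: a schema plus a dispatcher that runs the requested function. The `get_current_weather` tool and its stubbed result are hypothetical, the network request is omitted, and since the model can produce malformed or unexpected arguments, real code should validate them before executing anything. (OpenAI has since introduced a newer `tools`/`tool_calls` form of the same idea.)

```python
import json


def get_current_weather(location: str, unit: str = "celsius") -> dict:
    """Hypothetical local tool; a real one would query a weather service."""
    return {"location": location, "temperature": 22, "unit": unit}


# JSON Schema description that would be sent in the request's `functions` list.
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name, e.g. Pune"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]

# Map function names the model may request to local Python callables.
REGISTRY = {"get_current_weather": get_current_weather}


def dispatch(message: dict):
    """Execute the function requested in an assistant message, if any."""
    call = message.get("function_call")
    if call is None:
        return None  # ordinary text reply, nothing to run
    func = REGISTRY[call["name"]]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return func(**args)


# Example of the message shape the model returns when it wants a tool.
reply = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "get_current_weather", "arguments": '{"location": "Pune"}'},
}
print(dispatch(reply))
```

The tool's result would then be sent back to the model as a message with role `function`, so the model can phrase a final answer for the user.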

New gpt-3.5-turbo-16k model: a 16k-token context means the model can now handle ~20 pages of text in a single request, making cross-linking easier.
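The ~20 pages figure follows from simple arithmetic. This sketch assumes OpenAI's rule of thumb of roughly 0.75 English words per token and about 600 words per page; both are approximations that vary with language and layout.

```python
def estimate_pages(context_tokens: int,
                   words_per_token: float = 0.75,
                   words_per_page: int = 600) -> float:
    """Rough number of text pages that fit in a model's context window."""
    words = context_tokens * words_per_token
    return words / words_per_page


for name, tokens in (("gpt-3.5-turbo (4k)", 4_000),
                     ("gpt-3.5-turbo-16k", 16_000)):
    print(f"{name}: ~{estimate_pages(tokens):.0f} pages")
```

Keep in mind that the prompt and the model's reply share the same context window, so a 20-page input leaves little room for a long answer.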

ChatGPT plugin ecosystem: a great way to reach an end outcome by using one plugin per task and eventually combining their results. My favorite outcome from stitching plugins together: (a) watch a video and analyze it, (b) draw a diagram articulating the interconnections of what was seen and analyzed in the video, (c) share your viewpoints on it, and (d) create a new video summary based on this analysis.
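ChatGPT decides on its own which plugin to invoke at each step, but the underlying idea, feeding each step's output into the next, can be sketched by hand; it is also the “chain” pattern that frameworks such as LangChain popularized. In this minimal sketch each plugin is replaced by a hypothetical stub that returns a string; in practice each step would call a real plugin or model.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_pipeline(steps: List[Step], initial_input: str) -> str:
    """Feed the output of each step into the next and return the final result."""
    result = initial_input
    for step in steps:
        result = step(result)
    return result


# Hypothetical stand-ins for the four plugin tasks (a) to (d).
def analyze_video(video: str) -> str:
    return f"analysis of {video}"


def draw_diagram(analysis: str) -> str:
    return f"diagram from [{analysis}]"


def add_viewpoints(diagram: str) -> str:
    return f"viewpoints on [{diagram}]"


def summarize_to_video(notes: str) -> str:
    return f"video summary of [{notes}]"


outcome = run_pipeline(
    [analyze_video, draw_diagram, add_viewpoints, summarize_to_video],
    "talk.mp4",
)
print(outcome)
```

Giving every step the same string-in, string-out signature is what makes the steps easy to reorder or swap; real chains usually pass richer, structured data between steps.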

LangChain: a framework for integrating LLMs with other tools and data, widely used in the early phases of prototyping.

And many more.

In summary, we're living in an era where AI is catalyzing innovation across multiple domains, including text, video, image, music and language. The technology is boosting human creativity, enabling even those who are traditionally less creative to push their boundaries. AI-powered tools are helping writers craft better sentences, musicians compose more complex melodies, and designers generate more engaging visuals. In essence, AI isn't just eating the world; it's helping to recreate it. With the constant race between open-source and commercial models, we might end up with one Large Foundational Model working closely with many domain-specific foundational models, hopefully in harmony!

As we push the envelope and build powerful models to support complex use cases, we may have to watch for any “emergence” from these systems. This could lead an AI Guru to define its own goals based on newly emergent knowledge and, in turn, influence the AI Shishyas. Hopefully, we will have all the controls we need to guide this process for the better!